[ I’m breaking from my usual technical posts, since I have some wonderful news to share with you all; opinions mine, not those of my employer, etc. ]

For readers of elevated left-leaning publications such as the Guardian, one of the more remarkable discoveries one might make is that the sources of environmental devastation are not what you thought they were. Dim-witted people such as me might fixate on things like “heating and cooling”, agriculture (particularly the production of meat), and “ground and air transport” as significant sources of resource use and global warming. However, I bring my fellow members of the upper middle class good news. Fear not! It turns out (https://jsomers.net/blog/it-turns-out), that endless trips to Europe, our large cars, our big houses and our luxurious diets are in fact not the sources of global devastation we might have feared.

I bring good news: none of these things are that significant. Nearly all major sources of environmental devastation are perfectly aligned with things that, in the rigorous language of the social sciences, “give us the ick”.

There’s no need to change your behaviour in any way. One merely needs to avoid a few “icky” things – the list changes all the time, but for now, merely avoiding using “generative AI” and “fast fashion” suffices to save the planet. If you doubt me, go ahead and review the endless articles about how “AI is cooking the planet”. Alternately, you can write one yourself. I provide a helpful guide to some valuable techniques to write one of these planet-saving articles. These techniques will be just as valuable in the future if we discover some new things that “give us the ick” (gaming, streaming music and videos, washing dishes the wrong way, etc.).

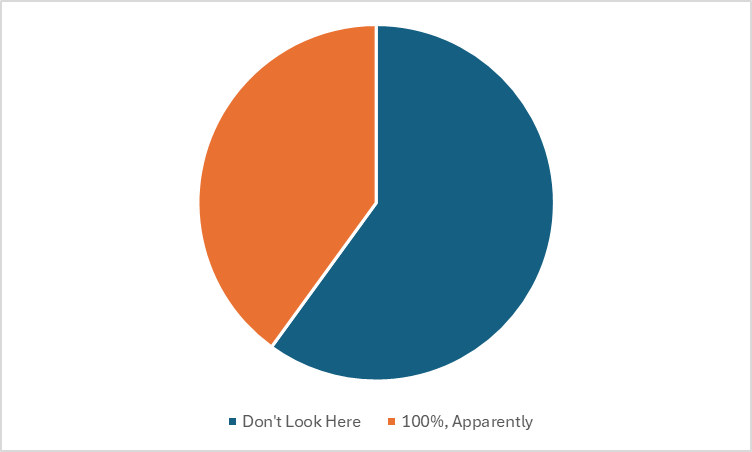

Numbers Are Boring

Readers are bored by cliched things like “numbers” and “proportions”. If you absolutely must include a number, for example by claiming that the training of LLAMA 2 (Facebook’s previous generation of generative AI) produces 539 tonnes of CO2 equivalent, recognise that you have now fatigued your readers. Under no circumstances should you introduce another number to your article.

It is in poor taste, and tedious to boot, for an author to compare this number to something like “the amount of CO2 produced by a more worthy activity, e.g. flying your upper-middle-class ass to New York to London and back” (around 3.2 tonnes of CO2 equivalent for a round trip per passenger, if you must know, counting radiative forcing, another boring concept). Dull and quotidian! There are 21 flights per day on this route and 3,878,590 seats in total per year. Imagine how tired and uncomfortable your reader will become if they are forced to multiply out these numbers and compare them to the impressive sounding “539 tonnes of CO2 equivalent”. Jeez, what is this, math class?

If You Must Compare, Use Households

A wonderful standard is to compare the usage of a large-scale AI model, used by millions of people, to some number of homes. As we all know, household usage of energy consumes the lion’s share of total energy consumption (in Australia, the residential sector uses 11% of total energy, way larger than transport at 47% or industry at 33%). So instead of reporting this tedious “539 tonnes of CO2 equivalent”, instead, report that ChatGPT is “already consuming the energy of 33,000 homes”. You might even get an article in Nature: https://www.nature.com/articles/d41586-024-00478-x

Again, it is in poor taste to mention that the US has 145 million homes. Don’t do that.

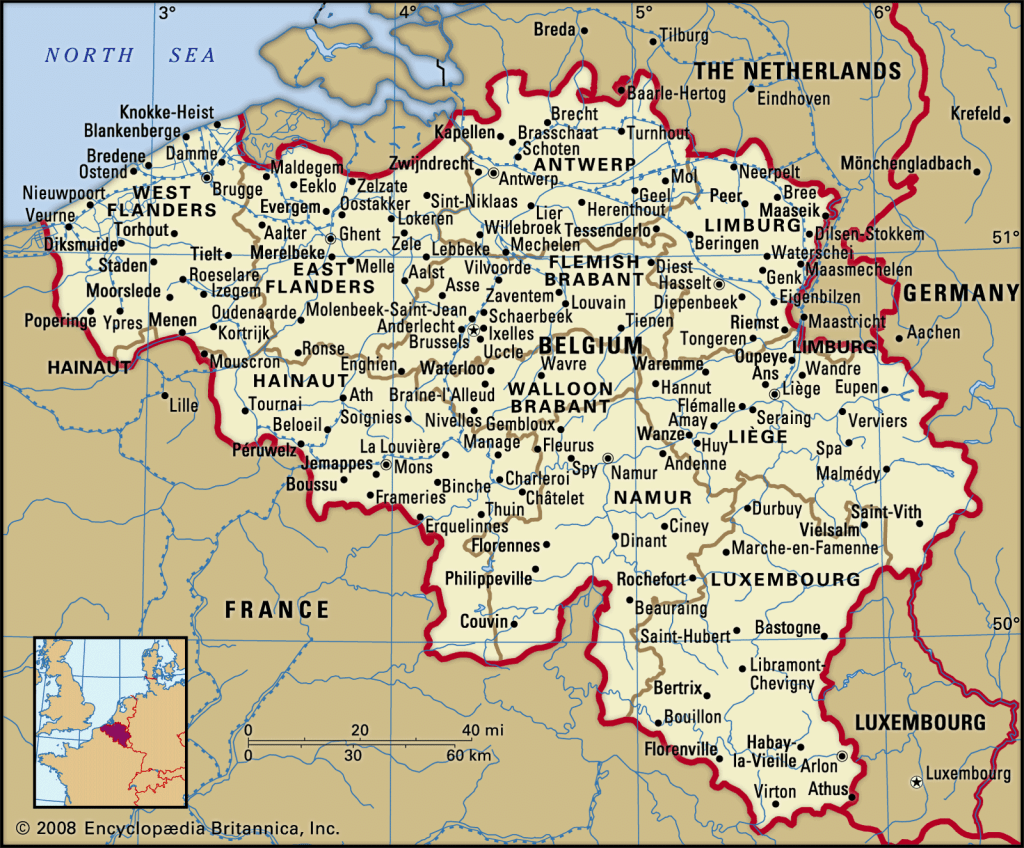

Everyone Knows How Big Belgium Is, Right?

Recognise that your readers – inveterate travelers all – love geography as much as they hate math class. Instead of a boring number, why not compare the amount of energy used to some manageable and familiar metric, such as “the total amount of energy used by Denmark” (or “Belgium”, if you must). Your readers almost certainly have a good sense of the population of these countries and how it compares to global population.

Special bonus points will accrue to you if you can work Lesotho or Andorra into your article; shoot your shot. It’s high time these little countries get a mention. Perhaps you could use your frequent flyer miles to visit.

Live In The Future

As the sage Criswell intones at the beginning of the movie Plan 9 from Outer Space, “We are all interested in the future, for that is where you and I are going to spend the rest of our lives.” As such, instead of reporting what is happening now (tiring, lame, predictable), consider using an estimate from the future (exciting, forward-looking, flexible). Both this, and the above technique, are used impressively in UNEP’s article on this matter: https://www.unep.org/news-and-stories/story/ai-has-environmental-problem-heres-what-world-can-do-about

The Part Can be As Big, Or Bigger, Than The Whole!

Please keep in mind that readers are likely to be wearied by nerdy things like descriptions of “data centers” or “global textile use”. To simplify matters, do go ahead and assign all “data center” energy use to generative AI. It’s quite likely that the other stuff happening in the “data center” isn’t very important or significant anyway.

If you want to apply this exciting rhetorical technique to “fast fashion” (see also: https://www.greenpeace.org/international/story/62308/how-fast-fashion-fuels-climate-change-plastic-pollution-and-violence/), consider ascribing more emissions to the subsector of “fast fashion” (“10% of global emissions”) than are produced by the sector that contains fast fashion (6-8% is the estimate of the textile industry). Again, have a care for your readers attention span. Tedious discussions about how the global textile industry comprises multiple sectors (apparel being 60% of the global textile industry) and how apparel is not, in fact, all “fast fashion” will only serve to bore your readers. Remember that your readers will only be comforted to hear that “fast fashion produces more CO2 than the transport sector”.

Think Local

A data center might not seem that impressive in its global impact, but be sure to narrow the metric down until it is. You will almost certainly discover that some data center consumes “30% of the water” in the county in which it’s located. What a discovery! Your readers will only be delighted by your agility as you hop from global issues to local ones (if, at some later date, we decide that aluminium “gives us the ick”, this will be useful to critique aluminium smelters, which almost certainly consume the vast proportion of energy in their neighborhood).

Don’t Cite Primary Sources

Don’t give in to the temptation to cite a primary source. Instead, go ahead and cite something that doesn’t cite primary sources either. This will give your readers the opportunity to follow a long chain of citations, a thing people love to do. Excitingly, they may end up at the end of the chain and discover that your primary source simply estimated (a scientific term for “made it up”) some environmental impact or provided a range of numbers. What an opportunity for discovery you are providing! A good approach here is to simply cite the top Google hit for your important information, without following any citations at all. Nothing is more convincing than a long chain of thinly researched posts.

Everyone in Tech is Trustworthy (When They Agree With You)

A wonderful aspect of writing these articles is that it’s very easy to find people in the technology sector who will echo your sentiments. This is because they genuinely want to save the planet, and nothing to do with the fact that they are promoting their own twist on the technology in question (or some adjacent technology, e.g. a fusion reactor). Anyone who thinks the latter is a dyed in the wool cynic and should be ashamed of themselves.

Conclusion: Book A Flight, You’re a Eco Hero Now

I hope my readers enjoyed this article. Remember, if you avoid “icky” things like generative AI and fast fashion, you are now an eco-warrior. Feel free to eat meat, book flights to Europe (treat yourself to business class!), drive everywhere and heat and cool a large house – you’ve done your bit to save the planet.

[ postscript: sarcasm aside, a lot of AI is definitely icky (none of the above should be considered a blanket defense of AI), and cryptocurrency mining is genuinely bad for the environment ]

(the pictured dish is apparently materials for

(the pictured dish is apparently materials for